Accurate positioning for self-driving cars

Is the car in the correct lane? How far away is the crosswalk? What a human driver usually estimates at a glance can only be achieved in self-driving cars by means of sophisticated sensors. Fraunhofer FHR has now investigated what radar sensors are able to do in this regard. The result: They can be used to determine the car’s position to within a few centimeters.

If cars are to drive themselves on roads and streets in the future, a number of basic technologies will be required. Among other tasks, the vehicle must be able to determine its position to within a few centimeters and, if necessary, create a map of its surroundings. Doing both at once is known as Simultaneous Localization and Mapping (SLAM), and it is particularly necessary in areas where the GPS signal is not accurate enough or where the surroundings are unknown and no map material is available. The environment can usually be measured with three types of sensors: optical cameras, LiDAR and radar. Optical cameras only work in bright light and with reasonably good visibility. LiDAR systems are bulky and expensive, and their results are not very reliable in poor weather conditions. Radar systems offer an alternative: They provide reliable results even in fog, heavy rain and darkness.

Investigation based on real test data

Can this kind of self-positioning be achieved in a car using radar sensors? This was investigated at Fraunhofer FHR. The test data came from nuScenes, a large-scale public dataset for autonomous driving. It includes radar and LiDAR data as well as data from optical cameras. The framework originates from the field of robotics. After all, robots too have to be able to move through their environment without causing accidents, and to do this, they need to record their surroundings in great detail.

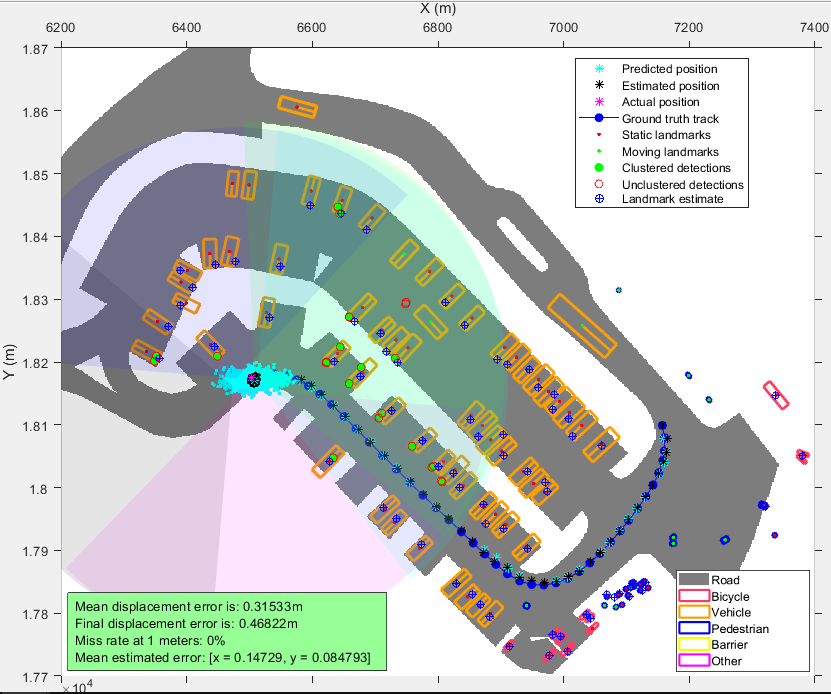

Since the radar data is too extensive for every individual point to be evaluated, data belonging to one object is combined. This process is assisted by bounding boxes created using sensor data from the camera and LiDAR. For example, if a bounding box is created for a car, all radar data within that box is clustered. The SLAM work shows that while self-localization techniques that use only radar data and known landmarks achieve higher positioning accuracy, SLAM techniques also make it possible to generate a map from radar data at the same time when no other sensor data is available. The position of the car can be determined to within 20 to 30 centimeters.
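
The clustering step can be illustrated with a minimal sketch. It is not the Fraunhofer FHR implementation: the `Box` class, the `cluster_by_boxes` function, the axis-aligned 2D boxes, and the sample coordinates are all assumptions made for illustration. Each radar point is assigned to the first bounding box that contains it, and each box's points are then reduced to a single centroid.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Hypothetical axis-aligned 2D bounding box (meters, vehicle frame)."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, x, y):
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


def cluster_by_boxes(points, boxes):
    """Group radar points by the first box that contains them.

    Returns a dict mapping box label -> centroid (x, y) of its points.
    Points outside every box are ignored; empty boxes are omitted.
    """
    grouped = {}
    for x, y in points:
        for box in boxes:
            if box.contains(x, y):
                grouped.setdefault(box.label, []).append((x, y))
                break  # assign each point to at most one box

    centroids = {}
    for label, pts in grouped.items():
        n = len(pts)
        centroids[label] = (
            sum(p[0] for p in pts) / n,
            sum(p[1] for p in pts) / n,
        )
    return centroids


# Made-up example: two boxes and five radar returns, one of them stray.
boxes = [Box("car", 4.0, -1.0, 8.0, 1.0), Box("sign", 10.0, 3.0, 11.0, 4.0)]
points = [(5.0, 0.0), (7.0, 0.5), (6.0, -0.5), (10.5, 3.5), (20.0, 20.0)]
centroids = cluster_by_boxes(points, boxes)
print(centroids)
```

In this sketch, the three returns inside the "car" box collapse to one point and the stray return at (20, 20) is discarded. Real bounding boxes in a driving dataset are typically three-dimensional and rotated, so a practical version would need an oriented containment test.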