Drone navigation based on radar images

Reconnaissance drones depend on reliable navigation systems. If the global navigation satellite system is disturbed, the inertial navigation system can be supported by radar images processed in real time, which improves navigation accuracy.

In crisis areas, global satellite navigation systems are often subject to local disturbances and then cannot be used for navigation. In poor visibility conditions, an aircraft can then only navigate using the data of its inertial navigation system. This system consists of ring laser gyroscopes and accelerometers, and the position is calculated by integrating their measurements. Even though these systems are highly accurate nowadays, there is always a residual error, which accumulates into a significant position deviation over time.
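Why a small residual error adds up can be shown with a one-dimensional dead-reckoning sketch: the acceleration is integrated twice with simple Euler steps. The constant accelerometer bias of 0.001 m/s² and the one-second sample interval are hypothetical values chosen for illustration; a real inertial navigation system integrates in three dimensions and uses the gyroscopes to track attitude.

```python
def dead_reckon(accels, dt):
    """Integrate acceleration twice (Euler steps) to obtain position over time."""
    vel, pos = 0.0, 0.0
    positions = []
    for a in accels:
        vel += a * dt
        pos += vel * dt
        positions.append(pos)
    return positions

DT = 1.0        # sample interval in seconds (assumed)
BIAS = 0.001    # hypothetical constant accelerometer bias in m/s^2
STEPS = 3600    # one hour of flight

true_acc = [0.0] * STEPS                      # drone flies at constant velocity
measured_acc = [a + BIAS for a in true_acc]   # sensor output includes the bias

true_pos = dead_reckon(true_acc, DT)
ins_pos = dead_reckon(measured_acc, DT)

for minutes in (1, 10, 60):
    k = minutes * 60 - 1
    print(f"after {minutes:2d} min: position error = {ins_pos[k] - true_pos[k]:8.1f} m")
```

The error grows roughly as ½·b·t²: in this example it is about 1.8 m after one minute but about 6.5 km after one hour.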

Military reconnaissance drones are frequently equipped with an all-weather imaging radar system used primarily for reconnaissance. With the computing power available today, radar images with a reduced resolution can already be processed in real time on board the drone and compared to digital map data. When prominent objects such as road intersections, rivers, or lakes are detected, they can be matched to the map data to determine the exact position of fixed points. If several such fixed points are available, the exact position of the drone can be calculated from the known geometry of the measured distances and viewing angles.
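As a simplified illustration of the last step, the sketch below computes a 2D position from the distances to three or more fixed points with known map coordinates (least-squares trilateration). It assumes ground-projected ranges, ignores the viewing angles, and uses hypothetical landmark coordinates; it is not the method used in the project.

```python
import math

def trilaterate(landmarks, ranges):
    """Least-squares 2D position from ranges to known landmarks.

    The circle equations (x - xi)^2 + (y - yi)^2 = ri^2 are linearised by
    subtracting the first one; the resulting normal equations
    A^T A p = A^T b are solved directly as a 2x2 system.
    """
    (x1, y1), r1 = landmarks[0], ranges[0]
    ata = [[0.0, 0.0], [0.0, 0.0]]
    atb = [0.0, 0.0]
    for (xi, yi), ri in zip(landmarks[1:], ranges[1:]):
        row = (2 * (xi - x1), 2 * (yi - y1))
        rhs = r1**2 - ri**2 + xi**2 - x1**2 + yi**2 - y1**2
        for i in range(2):
            atb[i] += row[i] * rhs
            for j in range(2):
                ata[i][j] += row[i] * row[j]
    det = ata[0][0] * ata[1][1] - ata[0][1] * ata[1][0]
    if abs(det) < 1e-12:
        raise ValueError("degenerate geometry, e.g. collinear landmarks")
    x = (ata[1][1] * atb[0] - ata[0][1] * atb[1]) / det
    y = (ata[0][0] * atb[1] - ata[1][0] * atb[0]) / det
    return x, y

# Hypothetical fixed points (e.g. a bridge, a road intersection, a lake shore) in metres.
landmarks = [(0.0, 0.0), (4000.0, 0.0), (0.0, 3000.0)]
drone = (1000.0, 1000.0)
ranges = [math.hypot(drone[0] - x, drone[1] - y) for x, y in landmarks]
print(trilaterate(landmarks, ranges))
```

At least three fixed points that do not lie on one line are needed for a unique 2D solution; additional points are absorbed by the least-squares fit.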

Kalman filtering of the navigation data together with the position fixes obtained by matching the radar images with the map data makes it possible to correct and support the drone's navigation system. This prevents the position error from growing over time.
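The principle can be sketched with a scalar Kalman filter along one axis. The INS velocity is used for the prediction step and a radar/map position fix, arriving once per minute, is used for the update step. The velocity error of 0.5 m/s, the noise variances, and the fix interval are all assumptions for illustration. A real integrated filter would estimate several error states (position, velocity, attitude, sensor biases) rather than a single position.

```python
import random

def kalman_predict(x, p, v_ins, dt, q):
    """Propagate the position with the INS velocity; uncertainty grows by q."""
    return x + v_ins * dt, p + q

def kalman_update(x, p, z, r):
    """Blend the predicted position x with a radar position fix z."""
    k = p / (p + r)                      # Kalman gain
    return x + k * (z - x), (1 - k) * p

rng = random.Random(42)
DT, STEPS = 1.0, 3600
V_TRUE, V_ERR = 50.0, 0.5    # true ground speed and hypothetical INS velocity error (m/s)
Q, R = 1.0, 25.0             # assumed process and fix noise variances (m^2)
FIX_EVERY = 60               # one radar fix per minute (assumed)

true_x = 0.0
ins_x = 0.0                  # unaided INS dead reckoning
x, p = 0.0, 0.0              # Kalman estimate and its variance
for step in range(1, STEPS + 1):
    true_x += V_TRUE * DT
    ins_x += (V_TRUE + V_ERR) * DT
    x, p = kalman_predict(x, p, V_TRUE + V_ERR, DT, Q)
    if step % FIX_EVERY == 0:
        z = true_x + rng.gauss(0.0, R ** 0.5)   # simulated noisy radar/map fix
        x, p = kalman_update(x, p, z, R)

print(f"unaided INS error:  {ins_x - true_x:7.1f} m")
print(f"Kalman-aided error: {x - true_x:7.1f} m")
```

Without fixes, the error grows steadily to 1800 m after one hour; with one fix per minute, it stays in the range of a few metres to a few tens of metres in this simulation.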

Even though this process cannot reach the accuracy of satellite navigation systems, this navigation method provides sufficient precision to carry out a mission in a region with a disturbed satellite navigation system. The accuracy of the navigation depends on the precision of the real-time radar image generation as well as the number, position, and position accuracy of the fixed points found.
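How strongly the number and position of the fixed points matter can be quantified with the dilution of precision (DOP), a standard measure from satellite navigation. The sketch below applies it to range-only fixes in 2D under the assumption of independent range errors with equal standard deviation σ; the position error is then roughly DOP × σ. The landmark layouts are hypothetical.

```python
import math

def dop_2d(drone, landmarks):
    """Horizontal dilution of precision for range-only fixes in 2D.

    Each row of the geometry matrix H is the unit vector from the drone
    to a landmark; DOP = sqrt(trace((H^T H)^-1)).
    """
    hth = [[0.0, 0.0], [0.0, 0.0]]
    for lx, ly in landmarks:
        dx, dy = lx - drone[0], ly - drone[1]
        d = math.hypot(dx, dy)
        u = (dx / d, dy / d)
        for i in range(2):
            for j in range(2):
                hth[i][j] += u[i] * u[j]
    det = hth[0][0] * hth[1][1] - hth[0][1] * hth[1][0]
    if det <= 1e-12:
        return math.inf          # all landmarks lie in (almost) the same direction
    # The trace of the inverse of a 2x2 matrix [[a, b], [c, d]] is (a + d) / det.
    return math.sqrt((hth[0][0] + hth[1][1]) / det)

def ring(angles_deg, dist=3000.0):
    """Hypothetical landmarks at the given bearings around the origin."""
    return [(dist * math.cos(math.radians(a)), dist * math.sin(math.radians(a)))
            for a in angles_deg]

spread = dop_2d((0.0, 0.0), ring([0, 120, 240]))
clustered = dop_2d((0.0, 0.0), ring([0, 5, 10]))
print(f"well-spread fixed points: DOP = {spread:.2f}")
print(f"clustered fixed points:   DOP = {clustered:.2f}")
```

Three fixed points spread evenly around the drone give a DOP of about 1.15, whereas three points within a 10° sector give a DOP of about 8, so the same range accuracy yields a much poorer position.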

Radar-based navigation requires an imaging radar sensor whose data are processed in real time. At Fraunhofer FHR, the MIRANDA-35 radar system has been used for this purpose. It operates at a center frequency of 35 GHz with a bandwidth of up to 1500 MHz. It also has a data link to the ground station, through which the radar is controlled and the images from the online processor are transmitted.
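The stated parameters can be related to image quality with the textbook formulas λ = c/f for the wavelength and δr = c/(2B) for the slant-range resolution of a pulse-compressed radar. This is a back-of-the-envelope sketch only; the resolution actually achieved by MIRANDA-35 also depends on processing and windowing, and the real-time images mentioned above use a deliberately reduced resolution.

```python
C = 299_792_458.0   # speed of light in m/s

def wavelength(freq_hz):
    """Carrier wavelength lambda = c / f."""
    return C / freq_hz

def range_resolution(bandwidth_hz):
    """Theoretical slant-range resolution c / (2B)."""
    return C / (2.0 * bandwidth_hz)

print(f"wavelength at 35 GHz: {wavelength(35e9) * 1e3:.2f} mm")
print(f"range resolution at 1500 MHz: {range_resolution(1.5e9) * 100:.1f} cm")
```

At 35 GHz the wavelength is about 8.6 mm, and a bandwidth of 1500 MHz corresponds to a theoretical range resolution of about 10 cm.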

To test the method for radar-image-based navigation, two suitable data sets, each covering a flight route of approximately 100 km, were recorded together with the associated raw navigation data. These data sets were first processed offline so that the methods and routines could be tested thoroughly and repeatably. To begin, IGI, the manufacturer of the navigation system, removed the GPS data from the raw navigation data. This produced a navigation data set like one that would have been recorded if the satellite navigation system had failed. As a result, two navigation data sets were available for each SAR data set: on the one hand, the original data set with satellite navigation, and on the other hand, the adapted data set without a usable GPS signal.
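Having both navigation data sets for the same flight makes it possible to measure how far the GPS-free solution drifts. The sketch below compares two time-aligned trajectories, assuming that the original GPS-aided data set serves as the reference and that positions are given as east/north coordinates in metres. The trajectories and the quadratic drift model are synthetic and hypothetical; they do not represent the recorded data.

```python
import math

def horizontal_deviation(reference, test):
    """Per-epoch horizontal distance between two time-aligned trajectories."""
    if len(reference) != len(test):
        raise ValueError("trajectories must be sampled at the same epochs")
    return [math.hypot(tx - rx, ty - ry) for (rx, ry), (tx, ty) in zip(reference, test)]

def rms(values):
    """Root-mean-square of a list of deviations."""
    return math.sqrt(sum(v * v for v in values) / len(values))

# Synthetic 100 km leg flown at 50 m/s and sampled once per second (2000 epochs).
reference = [(50.0 * t, 0.0) for t in range(2000)]
# Hypothetical GPS-free copy that drifts sideways quadratically over time.
no_gps = [(50.0 * t, 0.0005 * t * t) for t in range(2000)]

dev = horizontal_deviation(reference, no_gps)
print(f"RMS deviation: {rms(dev):.1f} m, maximum: {max(dev):.1f} m")
```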

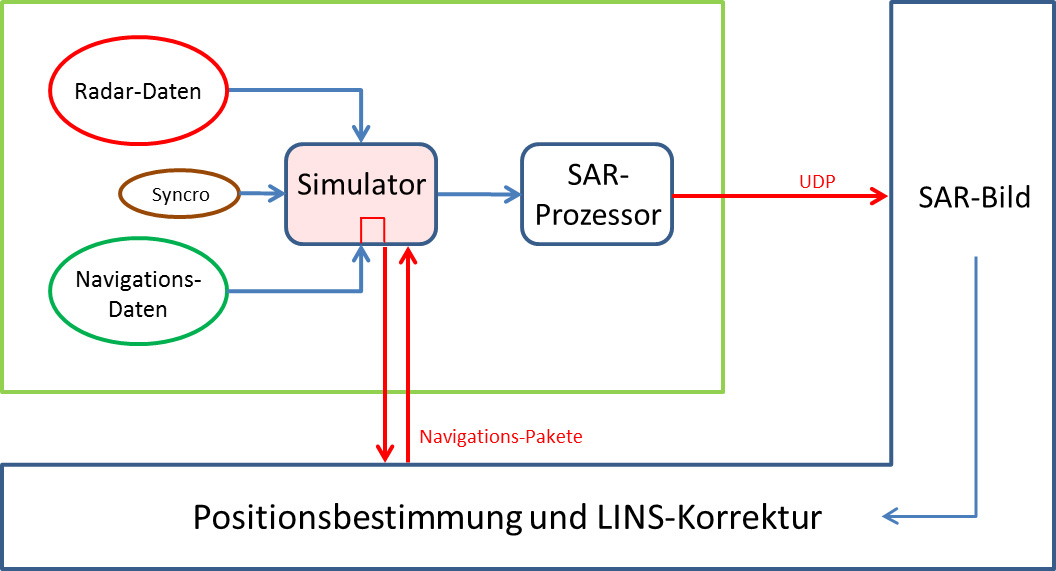

Next, the adapted navigation data sets were fed into the SAR processor together with the raw radar data and processed. The generated radar images were transferred to a computer of the project partner Airbus, where they were matched with the map data to determine the position. The resulting position fixes were passed to a Kalman filter together with the data of the navigation system. The Kalman filter corrects the navigation data and in turn feeds the correction values back into the SAR processor.
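The matching step can be illustrated with brute-force template matching by normalized cross-correlation (NCC): the radar image patch is slid over a rasterized map, and the offset with the highest correlation gives the location of the fixed point. This is a swapped-in textbook technique, not the Airbus method. Real SAR-to-map matching must also cope with speckle, geometric distortion, and the different appearance of radar and map data, typically by first extracting features such as roads or water edges. The small synthetic "road crossing" map is hypothetical.

```python
import math

def ncc(patch, template):
    """Normalized cross-correlation between two equally sized 2D lists."""
    a = [v for row in patch for v in row]
    b = [v for row in template for v in row]
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    num = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    den = math.sqrt(sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b))
    return num / den if den > 0 else 0.0

def match(map_img, radar_img):
    """Slide the radar patch over the map; return the best (row, col, score)."""
    th, tw = len(radar_img), len(radar_img[0])
    best = (0, 0, -2.0)
    for r in range(len(map_img) - th + 1):
        for c in range(len(map_img[0]) - tw + 1):
            patch = [row[c:c + tw] for row in map_img[r:r + th]]
            score = ncc(patch, radar_img)
            if score > best[2]:
                best = (r, c, score)
    return best

# Hypothetical 20x20 map raster with a road crossing at row 12, column 7.
map_img = [[1.0 if i == 12 or j == 7 else 0.0 for j in range(20)] for i in range(20)]
# Simulated radar patch: an 8x8 crop of the map with different brightness and contrast.
radar_img = [[0.2 + 0.5 * v for v in row[3:11]] for row in map_img[8:16]]

print(match(map_img, radar_img))
```

Because NCC is insensitive to brightness and contrast changes, the crop is found at row 8, column 3 with a score close to 1. Combined with the georeference of the map, this offset yields the map position of the detected fixed point.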

The tests demonstrated that the on-board navigation system can be supported with radar images. Even though the accuracy of a satellite navigation system cannot be reached, a continuous drift of the position is effectively prevented. The achievable position accuracy is more than sufficient for a smooth mission and can be maintained for the entire duration of the mission.